Can Tableau Handle 3 Million Rows?

Aggregate the data if possible to reduce the number of rows.
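Here is a minimal sketch of what aggregating before loading means. It uses only the Python standard library and collapses transaction-level rows into one row per (region, month), with sales summed. The field names `region`, `month`, and `sales` are hypothetical. In practice this rollup would more likely run in the source database or in the extract settings than in a script.

```python
from collections import defaultdict

def aggregate_rows(rows, keys, measure):
    """Collapse rows that share the same values in `keys`, summing `measure`."""
    totals = defaultdict(float)
    for row in rows:
        group = tuple(row[k] for k in keys)
        totals[group] += row[measure]
    # One output dict per distinct key combination, in first-seen order.
    return [{**dict(zip(keys, group)), measure: total} for group, total in totals.items()]

# Hypothetical transaction-level rows: three input rows collapse to two.
rows = [
    {"region": "East", "month": "2024-01", "sales": 10.0},
    {"region": "East", "month": "2024-01", "sales": 5.0},
    {"region": "West", "month": "2024-01", "sales": 7.0},
]
print(aggregate_rows(rows, ["region", "month"], "sales"))
```

Grouping on fewer, coarser dimensions shrinks the row count further, but it also removes detail that the workbook can no longer drill into.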

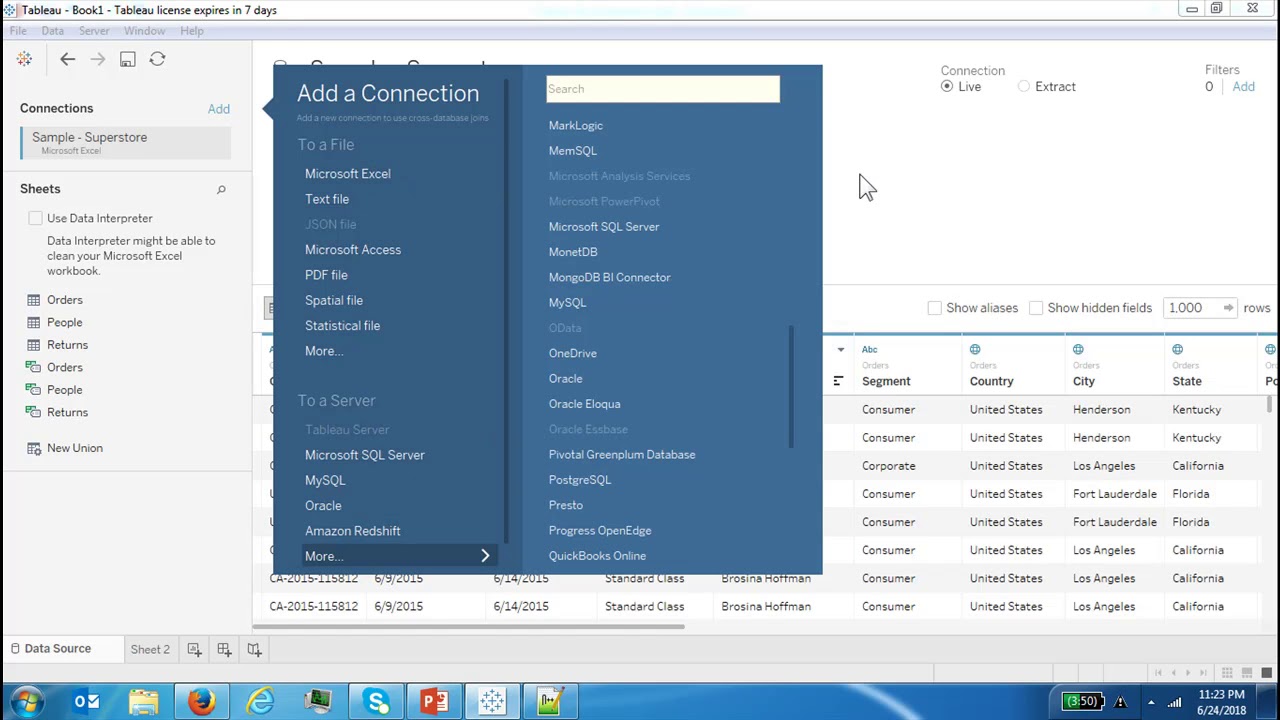

Can Tableau handle 3 million rows? When you create an extract, you can extract all rows or only the top N rows. What is the best way to load this data? Hide the columns you don't use. Query load drops drastically when you reduce the number of columns, because Tableau extracts are very sensitive to column count.
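Hiding fields happens inside Tableau. The same idea can also be applied upstream, so that unused columns never reach Tableau at all. The sketch below assumes the source is a CSV export and uses only the standard `csv` module. The column names are hypothetical.

```python
import csv
import io

def keep_columns(src, dst, columns):
    """Copy CSV text from file-like src to dst, keeping only `columns` in that order."""
    reader = csv.DictReader(src)
    available = reader.fieldnames or []
    missing = [c for c in columns if c not in available]
    if missing:
        raise KeyError(f"columns not found: {missing}")
    # extrasaction="ignore" silently drops every field not listed in `columns`.
    writer = csv.DictWriter(dst, fieldnames=columns, extrasaction="ignore")
    writer.writeheader()
    for row in reader:  # streams row by row; the whole file is never held in memory
        writer.writerow(row)

# Hypothetical export: only `region` and `sales` are used by the workbook.
src = io.StringIO("id,region,sales,comment\n1,East,10,foo\n2,West,7,bar\n")
dst = io.StringIO()
keep_columns(src, dst, ["region", "sales"])
print(dst.getvalue())
```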

The number-of-rows options depend on the type of data source you are extracting from. The only real limit on how much Tableau can handle is how many points (marks) are displayed. Tableau can manage data sets of tens of millions, and potentially hundreds of millions, of rows, although exact numbers vary with the specific circumstances and configuration.

Tableau normally performs better with long (tall) data than with wide data. The short answer is that Tableau can handle 50 million rows. Avoid using Tableau's Custom SQL dialog.
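To make "long versus wide" concrete, the sketch below unpivots a wide table with one column per month into a long table with one row per (store, month). This is similar in spirit to the Pivot command on Tableau's Data Source page, but it is a plain-Python illustration, not Tableau's implementation. The field names `store`, `jan`, and `feb`, and the output names `measure` and `value`, are hypothetical.

```python
def wide_to_long(rows, id_fields, var_name="measure", value_name="value"):
    """Unpivot: emit one long row for every non-id column of each wide row."""
    long_rows = []
    for row in rows:
        ids = {k: row[k] for k in id_fields}
        for column, value in row.items():
            if column in id_fields:
                continue  # identifier columns are repeated, not unpivoted
            long_rows.append({**ids, var_name: column, value_name: value})
    return long_rows

# Hypothetical wide data: one column per month.
wide = [
    {"store": "A", "jan": 10, "feb": 12},
    {"store": "B", "jan": 3, "feb": 4},
]
for r in wide_to_long(wide, ["store"]):
    print(r)
```

The long form has more rows but a fixed, small set of columns. Adding a new month adds rows instead of a new column, which suits column-sensitive extracts.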

I put a simple dashboard together on Friday with about 30 million rows. The full set will be about 100 million rows with 3 dimension tables (5 when it's done). We want the Tableau charts to refresh within 1 second.

A Tableau data extract (TDE) is a subset of data that you can use to improve the performance of your workbook, upgrade your data to allow for more advanced capabilities, and analyze your data offline. Try to limit the number of columns when connecting to data with millions of rows. Better still, make an extract with filters that reduce the rows to a small subset, and hide the unused fields to reduce the number of columns.
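The row-filtering half of that advice can be sketched outside Tableau as a streaming pass over a CSV that keeps only rows matching a condition, such as a date cutoff. This assumes a CSV source and a hypothetical `order_date` column in ISO format. Tableau's own extract filters are configured in the extract dialog, not in code. Only the row filter is shown here.

```python
import csv
import io
from datetime import date

def filter_rows(src, dst, keep_row):
    """Stream CSV rows from src to dst, writing only rows where keep_row(row) is True.

    Rows are handled one at a time, so memory use does not grow with file size.
    Returns the number of rows kept.
    """
    reader = csv.DictReader(src)
    if reader.fieldnames is None:  # empty input: nothing to copy
        return 0
    writer = csv.DictWriter(dst, fieldnames=reader.fieldnames)
    writer.writeheader()
    kept = 0
    for row in reader:
        if keep_row(row):
            writer.writerow(row)
            kept += 1
    return kept

# Hypothetical filter: keep only orders from 2024 onward.
src = io.StringIO("order_date,sales\n2023-12-31,5\n2024-01-02,8\n2024-03-15,2\n")
dst = io.StringIO()
n = filter_rows(src, dst, lambda r: date.fromisoformat(r["order_date"]) >= date(2024, 1, 1))
print(n, dst.getvalue())
```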

Those 30 million records are already aggregated, so we cannot reduce them further. With an extract, loading all the rows onto one sheet so that the end user can export them to CSV seems impossible, because the query runs forever.

That depends on RAM. Here are a few things to watch for.

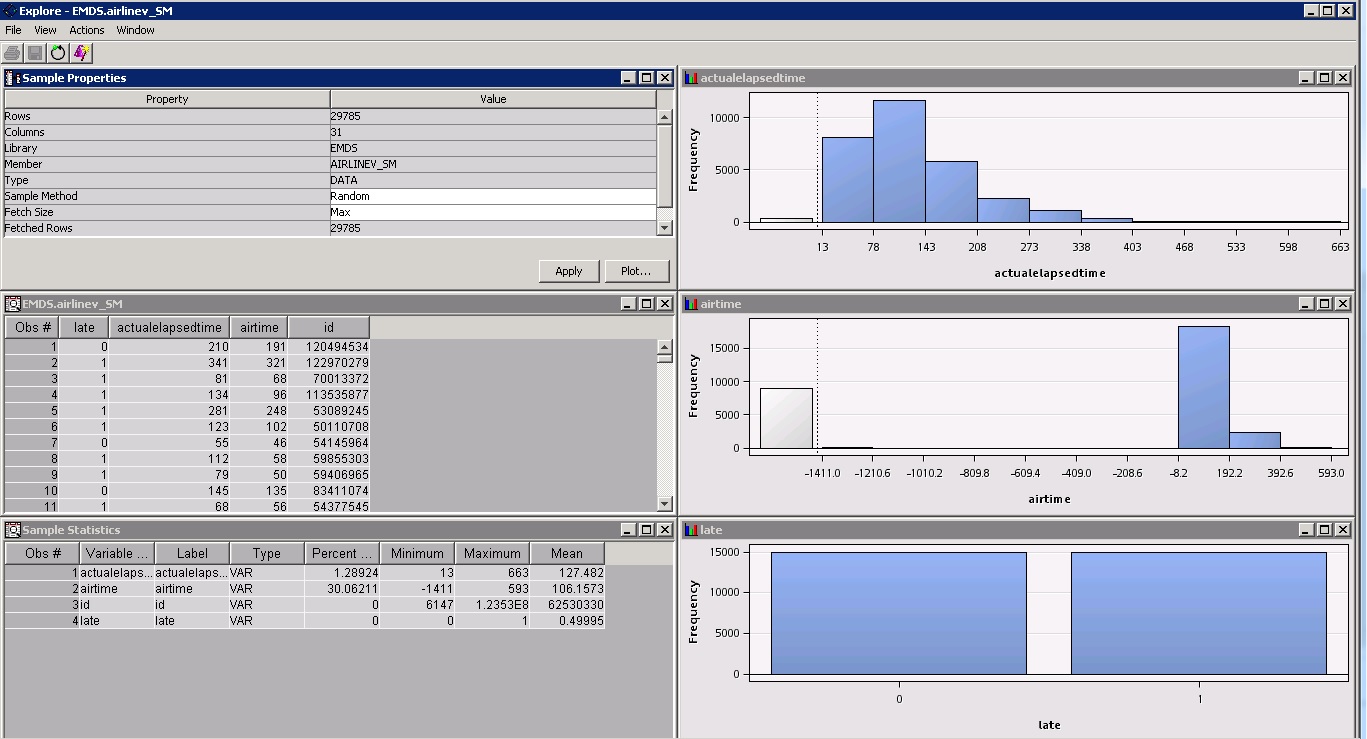

For most data types, your data set can have up to 4 columns and still stay within the 1 million row limit. Excel can handle this with Power Query. The data is stored on Teradata, and it performed fairly well.

There is no recommended maximum number of rows. I have a use case where I need structured data (30 million records and 20 columns) in a Tableau-compatible source. You can reduce the size of your data source by removing columns, filtering rows, or consolidating (aggregating) rows.
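A back-of-envelope calculation shows why removing columns and aggregating rows both matter. The sketch below assumes every cell takes a fixed 8 bytes uncompressed. That is a simplifying assumption: Tableau extracts use compressed columnar storage, so real sizes will differ. The function names are hypothetical.

```python
def raw_size_bytes(rows, columns, bytes_per_value=8):
    """Uncompressed size estimate, assuming every cell takes bytes_per_value bytes."""
    return rows * columns * bytes_per_value

def fmt_gb(n_bytes):
    """Format a byte count in decimal gigabytes (1 GB = 10**9 bytes)."""
    return f"{n_bytes / 1e9:.2f} GB"

full = raw_size_bytes(30_000_000, 20)        # 30M records x 20 columns
fewer_cols = raw_size_bytes(30_000_000, 10)  # after dropping half the columns
aggregated = raw_size_bytes(3_000_000, 10)   # after also aggregating to 3M rows
print(fmt_gb(full), fmt_gb(fewer_cols), fmt_gb(aggregated))  # 4.80 GB 2.40 GB 0.24 GB
```

Under this model, size scales linearly with both rows and columns. Halving the columns halves the estimate, and a tenfold row reduction cuts it by another factor of ten.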

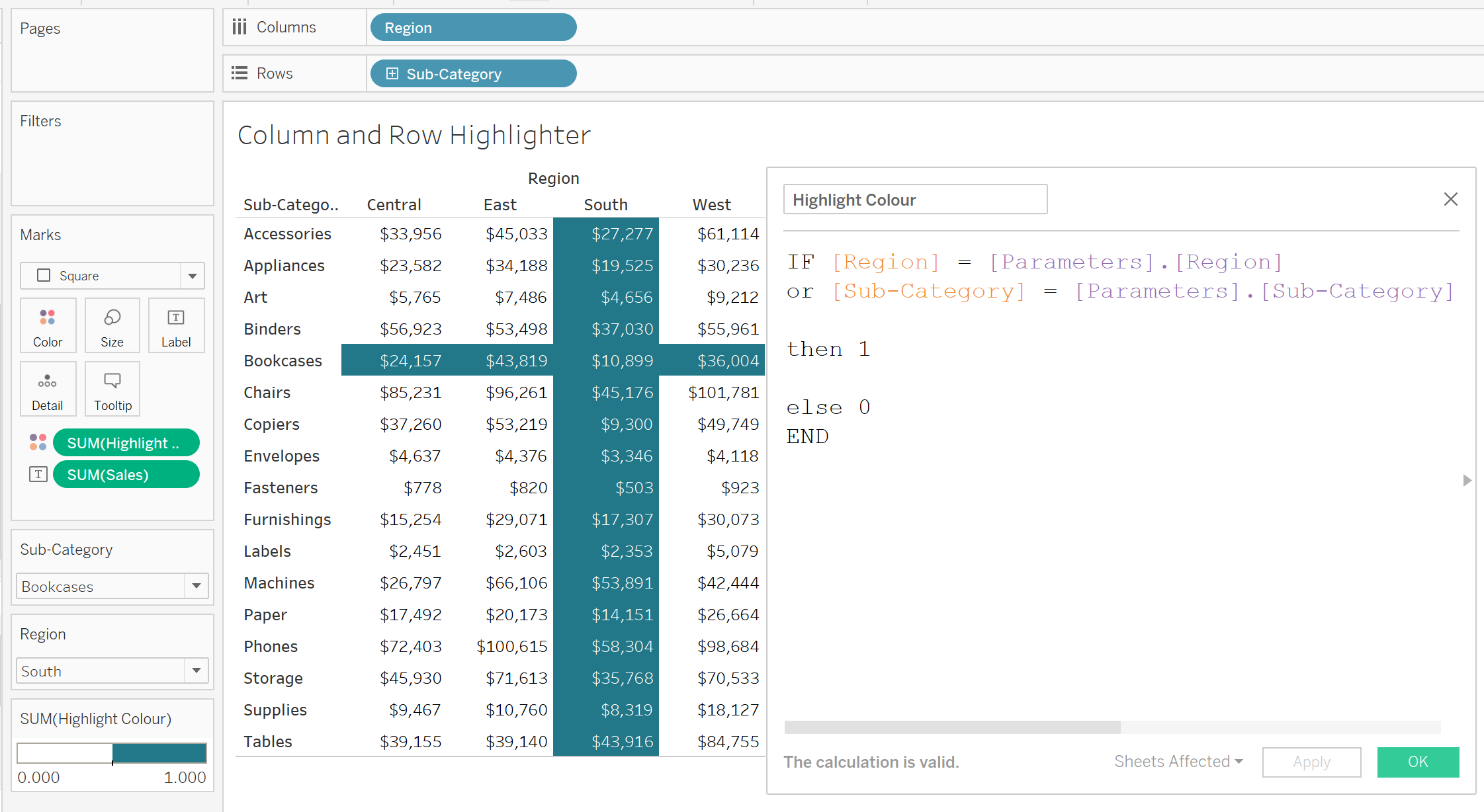

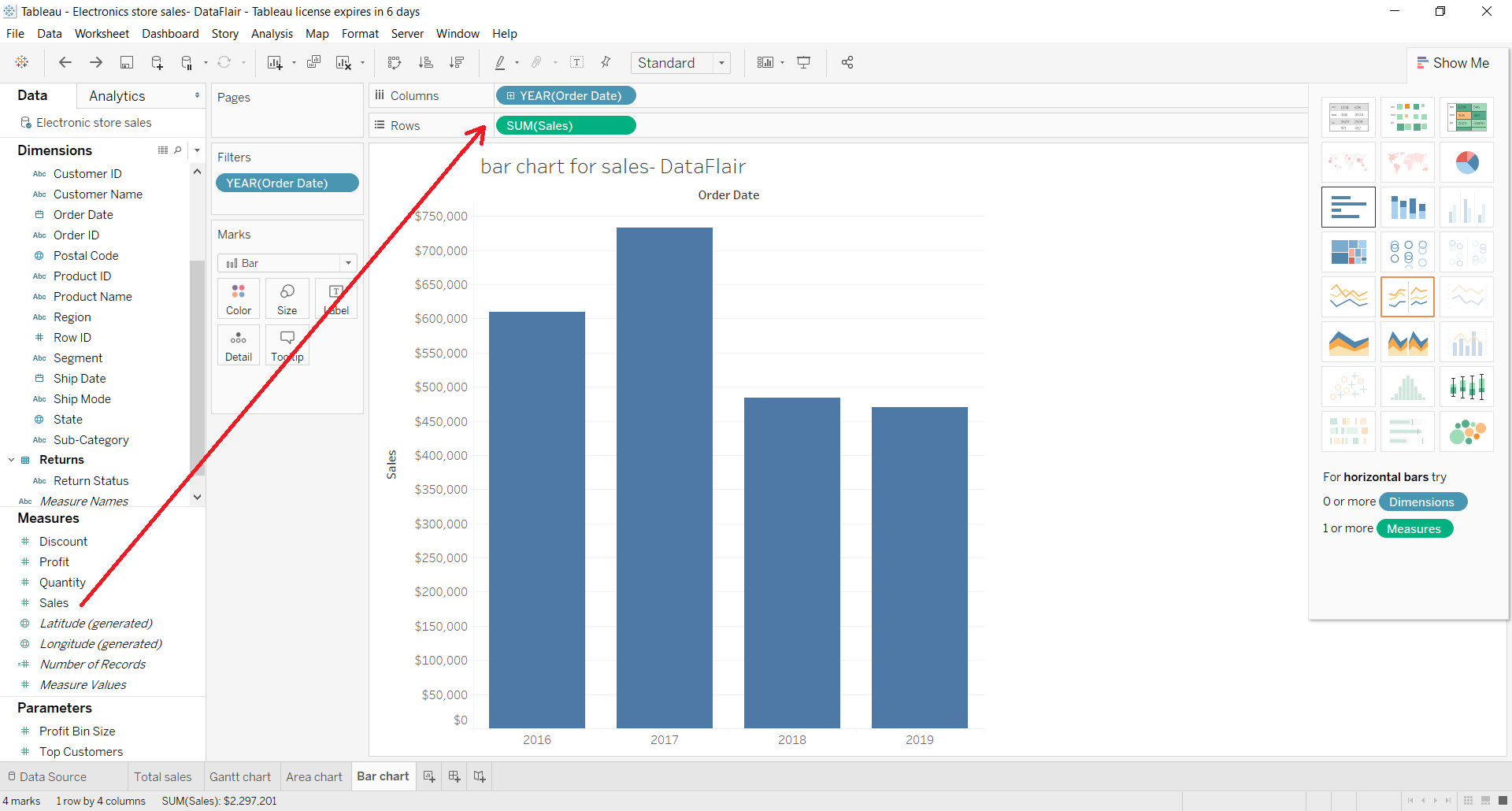

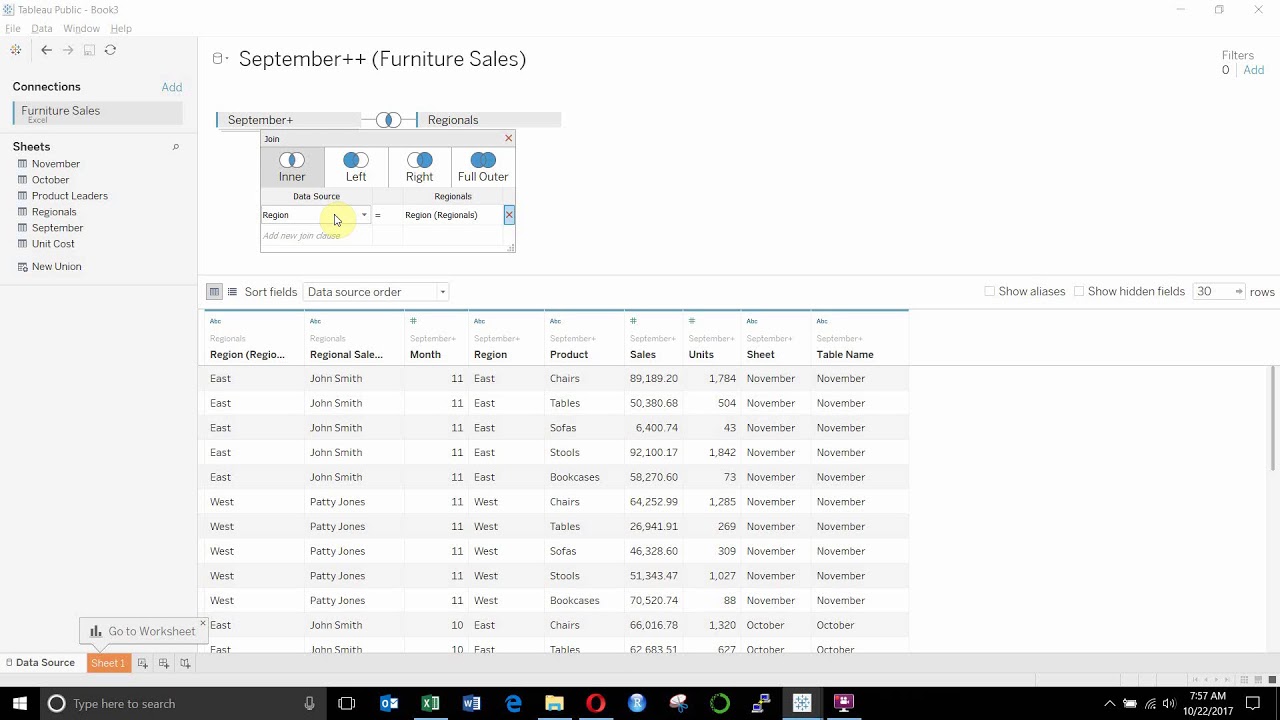

Because they require unique identifiers based on the selected filters, we can't even break the data set up into smaller tables that Tableau could handle. Is there any way to do this successfully? Let’s dive into an example together.